Jupyter Snippet CB2nd 08_cuda

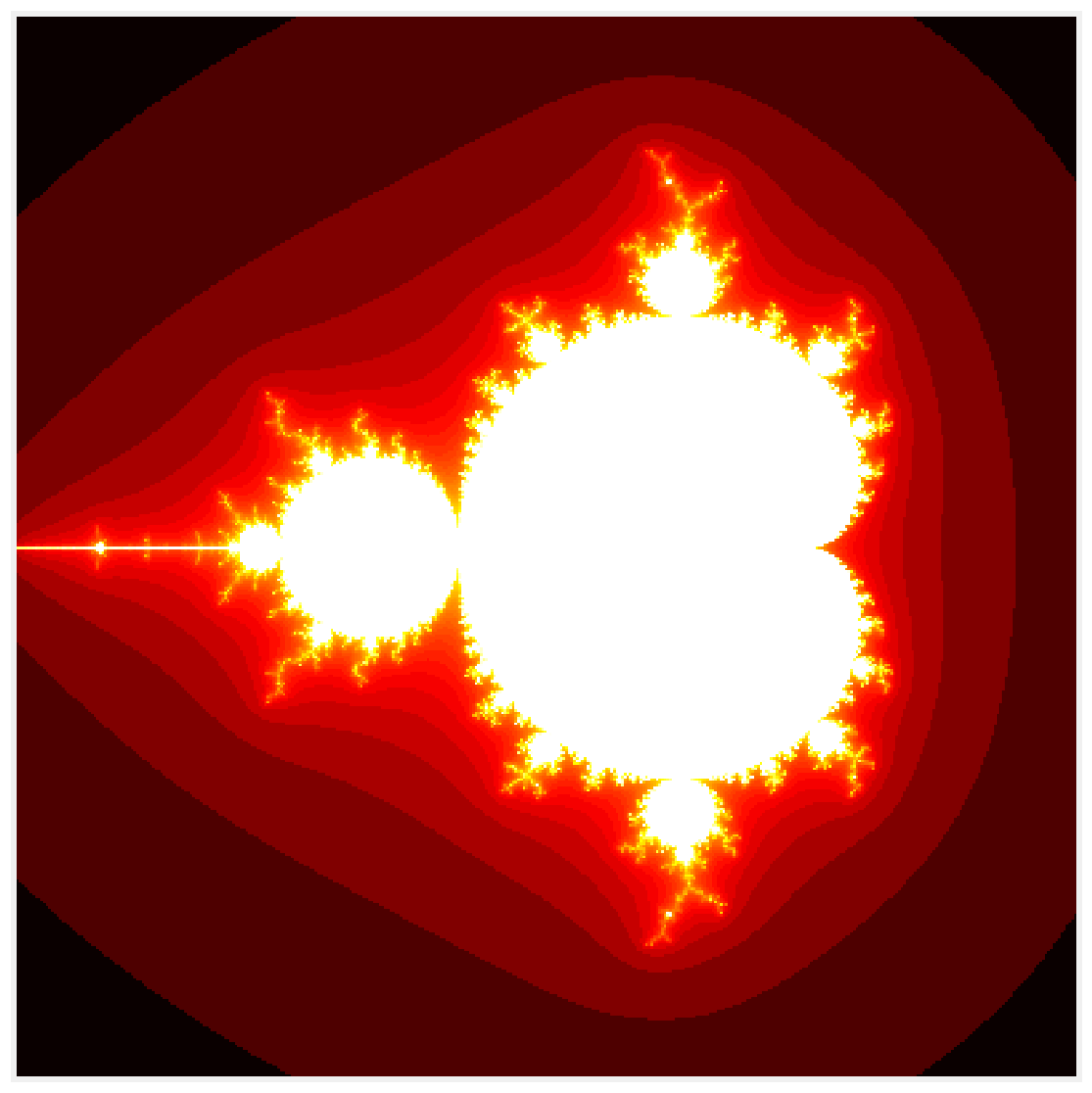

5.8. Writing massively parallel code for NVIDIA graphics cards (GPUs) with CUDA

import math
import numpy as np
from numba import cuda
import matplotlib.pyplot as plt
%matplotlib inline

len(cuda.gpus)

1

cuda.gpus[0].name

b'GeForce GTX 980M'

@cuda.jit
def mandelbrot_numba(m, iterations):
    # Matrix index.
    i, j = cuda.grid(2)
    size = m.shape[0]
    # Skip threads outside the matrix.
    if i >= size or j >= size:
        return
    # Run the simulation.
    c = (-2 + 3. / size * j +
         1j * (1.5 - 3. / size * i))
    z = 0
    for n in range(iterations):
        if abs(z) <= 10:
            z = z * z + c
            m[i, j] = n
        else:
            break
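To sanity-check the kernel without a GPU, the same per-pixel logic can be run on the CPU. The sketch below is an illustration, not part of the original recipe: `mandelbrot_cpu` is a hypothetical helper name, and it uses plain nested lists rather than a NumPy array so that it needs no third-party packages. The two outer loops stand in for the indices that `cuda.grid(2)` supplies to each thread.

```python
def mandelbrot_cpu(size, iterations):
    """Pure-Python reference for the per-pixel logic of the kernel above."""
    m = [[0] * size for _ in range(size)]
    for i in range(size):        # row index (i from cuda.grid(2) on the GPU)
        for j in range(size):    # column index (j from cuda.grid(2) on the GPU)
            c = (-2 + 3. / size * j +
                 1j * (1.5 - 3. / size * i))
            z = 0
            for n in range(iterations):
                if abs(z) <= 10:
                    z = z * z + c
                    m[i][j] = n
                else:
                    break
    return m


reference = mandelbrot_cpu(6, 20)
print(reference[0][0])  # pixel for c = -2 + 1.5j, which escapes quickly
print(reference[3][4])  # pixel for c = 0, which never escapes
```

With a 6x6 grid the pixel at row 3, column 4 maps exactly to c = 0, so it runs through all iterations, while the corner pixel leaves the escape radius after a few steps. Comparing such a reference against the GPU result on a small matrix is one way to catch indexing mistakes.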

size = 400
iterations = 100

m = np.zeros((size, size))

# 16x16 threads per block.
bs = 16
# Number of blocks in the grid.
bpg = math.ceil(size / bs)
# We prepare the GPU function.
f = mandelbrot_numba[(bpg, bpg), (bs, bs)]

f(m, iterations)
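The number of blocks is rounded up so that every pixel gets a thread, which is why the kernel needs its bounds check. A small sketch of that arithmetic follows; `launch_config` is a hypothetical helper introduced here for illustration and is not part of Numba.

```python
import math


def launch_config(size, bs):
    """Return (blocks per grid side, threads per side, idle threads per side)."""
    bpg = math.ceil(size / bs)   # same rounding as in the recipe
    threads = bpg * bs           # threads actually launched along one axis
    return bpg, threads, threads - size


print(launch_config(400, 16))  # the recipe's values: 400 is a multiple of 16
print(launch_config(410, 16))  # a size that does not divide evenly
```

With `size = 400` and `bs = 16` the 25x25 grid covers the matrix exactly. With a size of 410, 26 blocks per side are launched and six threads per side fall outside the matrix, where they hit the early `return`.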

fig, ax = plt.subplots(1, 1, figsize=(10, 10))
ax.imshow(np.log(m), cmap=plt.cm.hot)
ax.set_axis_off()

%timeit -n10 -r100 f(m, iterations)

2.99 ms ± 173 µs per loop (mean ± std. dev. of 100 runs, 10 loops each)

%timeit -n10 -r100 cuda.to_device(m)

481 µs ± 106 µs per loop (mean ± std. dev. of 100 runs, 10 loops each)

%%timeit -n10 -r100 m_gpu = cuda.to_device(m)
f(m_gpu, iterations)

101 µs ± 11.8 µs per loop (mean ± std. dev. of 100 runs, 10 loops each)

m_gpu = cuda.to_device(m)

%timeit -n10 -r100 m_gpu.copy_to_host()

238 µs ± 67.8 µs per loop (mean ± std. dev. of 100 runs, 10 loops each)
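The four measurements above can be combined to compare passing a NumPy array directly (Numba then handles the transfers itself) with managing the transfers by hand. The arithmetic below only restates the mean timings printed in this notebook, which will differ on other hardware; the variable names are illustrative.

```python
# Mean timings reported above, in microseconds.
kernel_with_host_array = 2990    # f(m, iterations), NumPy array passed directly
copy_to_device = 481             # cuda.to_device(m)
kernel_with_device_array = 101   # f(m_gpu, iterations)
copy_to_host = 238               # m_gpu.copy_to_host()

# Explicit path: copy in, run the kernel on the device array, copy out.
manual_total = copy_to_device + kernel_with_device_array + copy_to_host
ratio = kernel_with_host_array / manual_total

print(manual_total)      # total for the explicit transfer path, in µs
print(round(ratio, 2))   # implicit path time divided by explicit path time
```

Treat the ratio as a rough indication only. A kernel launched on a device array may return before the GPU has finished, so the 101 µs figure possibly understates the actual execution time.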

# Thread id in a 1D block
tx = cuda.threadIdx.x
# Block id in a 1D grid
ty = cuda.blockIdx.x
# Block width, i.e. number of threads per block
bw = cuda.blockDim.x
# Compute flattened index inside the array
pos = tx + ty * bw
if pos < an_array.size:  # Check array boundaries
    # One can access `an_array[pos]`
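The index computation in the fragment above can be emulated on the CPU to see that each array element is visited by exactly one thread. This is a sketch under stated assumptions: `covered_positions` is a hypothetical helper, and the two loops merely play the roles of `cuda.blockIdx.x` and `cuda.threadIdx.x`, so no GPU is involved.

```python
def covered_positions(array_size, threads_per_block):
    """Emulate on the CPU which array positions a 1D grid of threads touches."""
    blocks_per_grid = -(-array_size // threads_per_block)  # ceiling division
    hits = [0] * array_size
    for block_id in range(blocks_per_grid):          # role of cuda.blockIdx.x
        for thread_id in range(threads_per_block):   # role of cuda.threadIdx.x
            pos = thread_id + block_id * threads_per_block
            if pos < array_size:                     # same boundary check as above
                hits[pos] += 1
    return blocks_per_grid, hits


blocks, hits = covered_positions(10, 4)
print(blocks)  # blocks needed for 10 elements with 4 threads per block
print(hits)    # how many threads touched each position
```

Ten elements with four threads per block need three blocks, and the last two threads of the final block are filtered out by the boundary check. Inside a Numba kernel, `cuda.grid(1)` returns this same flattened index.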